I will be speaking at least once at the 2011 O'Reilly MySQL conference. I

have not heard back on all my proposed session but I will be talking

about Open Source BI tools. There are several great packages to help

DBAs make reports from their MySQL instances. These tools are great for

simple ad-hoc reports to highly detailed data dumps.

I am a MySQL Community Manager. Part of the job is working with you to help define MySQL future. Please feel free to let me know where you want MySQL to be next month, next year, and next decade. And the views expressed on this blog are my own and do not necessarily reflect the views of Oracle.

Wednesday, December 15, 2010

Speaking at the O'Reilly MySQL Conference - April 2011

Monday, November 29, 2010

MySQL 5.5 Authentication Goodies

MySQL 5.5 is currently in the Release Candidate phase and making good

progress on the way to being a Generally Available release. There

are many new features that will improve performance, make service

more robust, and generally make life better for DBAs. But since

5.5.7 was released for evaluation in October, there has not been a

lot of attention given to the changes in authentication.

To greatly simplify, MySQL has a table with a list of users and a

list of hosts from which those users are allowed access. So user

'jones' and the host they connect from are checked to make sure they

are allowed access. If they match, they can access the instance.

As of 5.5.7, MySQL authentication now supports pluggable

authentication and proxies. So now you can use PAM, Windows native

authentication, LDAP, or something similar to control user accounts.

Or use proxies for group of users. For example user 'smith' is in the

'programmer' group in the LDAP directory. But there is no entry in

the MySQL.user table for that user. The server will now use the

pluggable authentication to reference an outside authentication

service to determine the group. The connection will be established

with the USER() set to 'smith@host' and CURRENT_USER() set to

'programmer@%'. Please refer to

http://dev.mysql.com/doc/refman/5.5/en/proxy-users.html for details.

progress on the way to being a Generally Available release. There

are many new features that will improve performance, make service

more robust, and generally make life better for DBAs. But since

5.5.7 was released for evaluation in October, there has not been a

lot of attention given to the changes in authentication.

To greatly simplify, MySQL has a table with a list of users and a

list of hosts from which those users are allowed access. So user

'jones' and the host they connect from are checked to make sure they

are allowed access. If they match, they can access the instance.

As of 5.5.7, MySQL authentication now supports pluggable

authentication and proxies. So now you can use PAM, Windows native

authentication, LDAP, or something similar to control user accounts.

Or use proxies for group of users. For example user 'smith' is in the

'programmer' group in the LDAP directory. But there is no entry in

the MySQL.user table for that user. The server will now use the

pluggable authentication to reference an outside authentication

service to determine the group. The connection will be established

with the USER() set to 'smith@host' and CURRENT_USER() set to

'programmer@%'. Please refer to

http://dev.mysql.com/doc/refman/5.5/en/proxy-users.html for details.

Monday, November 15, 2010

New job as MySQL Community Manager, North America

As of today I am the MySQL Community Manager for North America. Part of the job description is work as part of a team that acts as the central resource and driving force for the design, process, manufacturing, test, quality and marketing of products(s) as they move from conception to distribution. So, you now have a focal point (me) to get your ideas into MySQL.

So help me gather and analyze information to define product specifications to move MySQL forward.

So help me gather and analyze information to define product specifications to move MySQL forward.

Thursday, November 4, 2010

Calpont InfiniDB 2.0 and BI QuickStarts

The 2.0 release of Calpont InfiniDB

is ready for download. New features for the columnar database storage engine for MySQL include data compression, fully parallelized & scalable UDFs, and partition drop has been added to the automatic vertical & horizontal data partitioning.

And for those of you new to data warehousing and business intelligence, there are QuickStart for data reporting tools from Japersoft, BIRT, and Pentaho plus a guide for using Pentaho spoon with the Calpont Infinidb bulk loader.

is ready for download. New features for the columnar database storage engine for MySQL include data compression, fully parallelized & scalable UDFs, and partition drop has been added to the automatic vertical & horizontal data partitioning.

- Benefits of InfiniDB Enterprise 2.0

- 20-50% query performance improvement when reading from disk

- Distributed in-database calculations provide greater flexibility to the data analyst, and enable faster performance for deep analytics

- Removing obsolete data from the database quickly frees up disk storage and improves query response

And for those of you new to data warehousing and business intelligence, there are QuickStart for data reporting tools from Japersoft, BIRT, and Pentaho plus a guide for using Pentaho spoon with the Calpont Infinidb bulk loader.

Thursday, October 21, 2010

Who certified MySQL what?

Some of you in the MySQL world may have missed FAQ: Sun Certifications and Oracle-Branded Certificates from the Oracle Certification Blog. The synopsis is that anyone earning a MySQL certification before September 1st will be a SUN Certified MySQL {associate|DBA|Cluster DBA|Developer}. And starting September 1st you will be an ORACLE Certified MySQL {associate|DBA|Cluster DBA|Developer}. There is no need to replace your old certificates to get the Oracle branding and will continue to be recognized under the Oracle certification program and will be valid without expiration.

Want a new certificate anyway? Well, Oracle is planning an upgrade path in the future. Please see the linked article above for details.

Want a new certificate anyway? Well, Oracle is planning an upgrade path in the future. Please see the linked article above for details.

Tuesday, October 5, 2010

Time to try Drizzle

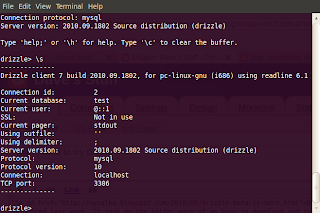

The Drizzle Beta was announced last week. It took me the better part of an hour to download and install Drizzle, mainly apt-geting packages not on the fresh install of Ubuntu I was using. Be sure to read the Drizzle Wiki on how to start Drizzle after building.

Anyone who has built MySQL or Postgres from source can build Drizzle. Anyone who has not done so needs to pay attention to the output of the make command and read through the documentation.

The default storage engine is the InnoDB plugin. The client program (pictured) will look familiar to MySQL-ers. Drizzle worked as expected on the simple tasks it perform. And now that I have driven it around the block, I will have to take it for a longer trip this weekend.

So if you have a few spare cycles and are curious, give Drizzle a spin.

Anyone who has built MySQL or Postgres from source can build Drizzle. Anyone who has not done so needs to pay attention to the output of the make command and read through the documentation.

The default storage engine is the InnoDB plugin. The client program (pictured) will look familiar to MySQL-ers. Drizzle worked as expected on the simple tasks it perform. And now that I have driven it around the block, I will have to take it for a longer trip this weekend.

So if you have a few spare cycles and are curious, give Drizzle a spin.

Wednesday, September 29, 2010

Pentaho Kettle Solutions

I have several favorite authors -- Tim Dorsey, Clive Cussler, and few others that I buy their latest book just because I trust the quality of their work. Now on that list are Roland Bouman, Jos van Dongen, and Matt Casters. In a follow up to Bouman's and van Dongen's Pentaho Solutions: Business Intelligence and Data Warehousing with Pentaho and MySQL, the have now produced Pentaho Kettle Solutions which explores the often murky world of ETL and data integration.

I have several favorite authors -- Tim Dorsey, Clive Cussler, and few others that I buy their latest book just because I trust the quality of their work. Now on that list are Roland Bouman, Jos van Dongen, and Matt Casters. In a follow up to Bouman's and van Dongen's Pentaho Solutions: Business Intelligence and Data Warehousing with Pentaho and MySQL, the have now produced Pentaho Kettle Solutions which explores the often murky world of ETL and data integration. Kettle can be confusing as there are many components with names such as spoon and pan but these tools are valuable to any DBA who has to feed data into an instance. If you have a data ware house the tools are invaluable.

This book covers initial steps with Kettle to cleaning up the worst raw data and even getting the information into the now ubiquitous cloud. Having stumbled along with a few tutorials and the Kettle docs, I can testify that this book is a must have for anyone wanting to use Kettle to feed a database. The text is clear, the example concise, and the book progresses logically.

Kettle is wonderful for modifying raw data before being sent to your database. need to clean up telephone numbers from xxx-xxx-xxxx, (xxx) xxx-xxxx, or xxxxxxxxxx in your format of choice? Or are state or country abbreviations (Ca, CA, Cal., & California) causing problems. Then you need Kettle and this book.

Wednesday, September 15, 2010

MySQL Certification Study Group in Austin

It looks like the good folks in Austin are keeping busy:

Announcing a new Meetup for The Austin MySQL Meetup Group!

What: GeekAustin Fall MySQL Happy Hour

When: Wednesday, September 22, 2010 6:30 PM

Where: Triumph Cafe

3808 Spicewood Springs Rd

Austin, TX 78759

512-343-1875

Mark and Boyd just finished GeekAustin's 10 week MySQL Associate Certification Study Group, and now we're wondering what should be next. Boyd would like to lead a study group for the MySQL Developer I exam, and Mark would like to host a few meetups on replication strategies and other topics of interest.

The Austin MySQL Meetup and the GeekAustin MySQL SIG are hosting a happy hour next Wednesday, September 22, to meet with everyone and find out what sort of events/study groups people would like to see in the coming months.

The happy hour will be hosted at Triumph Cafe on Anderson Lane.

Austin has a great MySQL community. If you're looking to sharpen your skills, or just spend an evening with folks who share your interest, come join us.

RSVP at EventBrite:

http://mysqlhappyhour.eventbrite.com/

RSVP to this Meetup:

http://www.meetup.com/mysql-36/calendar/14779596/

Announcing a new Meetup for The Austin MySQL Meetup Group!

What: GeekAustin Fall MySQL Happy Hour

When: Wednesday, September 22, 2010 6:30 PM

Where: Triumph Cafe

3808 Spicewood Springs Rd

Austin, TX 78759

512-343-1875

Mark and Boyd just finished GeekAustin's 10 week MySQL Associate Certification Study Group, and now we're wondering what should be next. Boyd would like to lead a study group for the MySQL Developer I exam, and Mark would like to host a few meetups on replication strategies and other topics of interest.

The Austin MySQL Meetup and the GeekAustin MySQL SIG are hosting a happy hour next Wednesday, September 22, to meet with everyone and find out what sort of events/study groups people would like to see in the coming months.

The happy hour will be hosted at Triumph Cafe on Anderson Lane.

Austin has a great MySQL community. If you're looking to sharpen your skills, or just spend an evening with folks who share your interest, come join us.

RSVP at EventBrite:

http://mysqlhappyhour.eventbrite.com/

RSVP to this Meetup:

http://www.meetup.com/mysql-36/calendar/14779596/

Thursday, September 9, 2010

Oracle Certified MySQL Certifications

I may no longer be in charge of MySQL Certification but it does not mean I am not looking out for those of us with those certs. I am still moderating the MySQL Certified Professionals Group on LinkedIn and running the North Texas MySQL USers Group. But I missed Oracle University re-branding the Sun certifications as Oracle certifications earlier this month. This includes the MySQL certifications that were re-branded as Sun.

And for those wanting to take the certification exams, they retain the Prometric numbers set up by Sun. But you will find them listed as:

And for those wanting to take the certification exams, they retain the Prometric numbers set up by Sun. But you will find them listed as:

310-810 - Oracle Certified MySQL 5.0 Database Administrator Part 1 310-811 - Oracle Certified MySQL 5.0 Database Administrator Part 2 310-812 - Oracle Certified MySQL 5.0 Developer Part 1

310-813 - Oracle Certified MySQL 5.0 Developer Part 2

310-815 - Oracle Certified MySQL 5.1 Cluster Database Administrator

310-814 - Oracle Certified MySQL Associate

Wednesday, August 11, 2010

Redis -- key pair and replication

DBAs seem to be getting hit over the head with the NoSQL message while trying to keep their SQL systems going. SQL does have its place in the world1 and much of the NoSQL push seems to be a way to get past some of SQL's limitations. But simply moving from a proven technology and infrastructure to something new with fad overtones is not going to make life easier for Joe Average DBA. Redis is one of those project that will get notice from a lot of DBAs looking for a very fast key-value datastore.

Redis keeps the dataset in memory but writes to disk asynchronously and reloaded when Redis is restarted. Or the data can be saved each time a command is issued or on schedule to minimize data loss.

Redis also has master-slave replication and setup consists of a 'slave of x.x.x.x' line in the slave's config file. And is the only trivial thing about Redis.

The Command Reference shows a lot of thought and hard work has gone into Redis. Redis has a lot to offer Joe Average DBA without making him forsake his comfortable base.

Redis keeps the dataset in memory but writes to disk asynchronously and reloaded when Redis is restarted. Or the data can be saved each time a command is issued or on schedule to minimize data loss.

Redis also has master-slave replication and setup consists of a 'slave of x.x.x.x' line in the slave's config file. And is the only trivial thing about Redis.

The Command Reference shows a lot of thought and hard work has gone into Redis. Redis has a lot to offer Joe Average DBA without making him forsake his comfortable base.

- SQL is not going away. And not like COBOL, FORTRAN or I Love Lucy not going away. SQL solves too many problems to be pushed aside. It does have limitations but cleaver engineering will mitigate them.

Friday, August 6, 2010

Open Source BI Report tools

I am going to take a mulligan on a previous Blog Post on Open Source BI reporting tools. Part of my job at Calpont is getting familiar with tools folks with use with the InfiniDB storage engine. After all, what is the point of having all those terabytes in a data warehouse if you can not produce some sort of report from them. Three popular open source BI reporting tools are available from BIRT, Pentaho, and Jaspersoft.

Proceeding alphabetically, BIRT is part of the Eclipse IDE world. A BI report is a new project or new report under a project. And if you like Eclipse or Java IDEs, then you will probably like BIRT for reports.

Pentaho's Rerport Designer is a stand alone program. As is iReport from Jaspersoft.

I had no problems connection to a data mart in a InfiniDB instance with the old JDBC connection with any of the products. And all three produced reports of various levels of complexity from my SQL. Plus you can pretty much format the exams to your heart's content.

So which is better? That would be up to the user having to run the software. For those of you really interested, there will be quick start guides up soon on each of the three BI tools on the Calpont InfiniDB website. If I missed your favorite BI reporting tool, please let me know.

Now I am digging through the ETL tools. I was getting a millions and a half rows a second into an InfiniDB table using the cpimport tool. Now to get the tools and cpimport working together!

Proceeding alphabetically, BIRT is part of the Eclipse IDE world. A BI report is a new project or new report under a project. And if you like Eclipse or Java IDEs, then you will probably like BIRT for reports.

Pentaho's Rerport Designer is a stand alone program. As is iReport from Jaspersoft.

I had no problems connection to a data mart in a InfiniDB instance with the old JDBC connection with any of the products. And all three produced reports of various levels of complexity from my SQL. Plus you can pretty much format the exams to your heart's content.

So which is better? That would be up to the user having to run the software. For those of you really interested, there will be quick start guides up soon on each of the three BI tools on the Calpont InfiniDB website. If I missed your favorite BI reporting tool, please let me know.

Now I am digging through the ETL tools. I was getting a millions and a half rows a second into an InfiniDB table using the cpimport tool. Now to get the tools and cpimport working together!

Thursday, July 29, 2010

North Texans -- vote for your new meeting location

The North Texas MySQL Users Group is now a special interest group withing the Dallas Oracle Users Group. As such, we can meet in oracle's offices in Plano or Irving. In the past there has been demand for meetings in the northern part of the Metroplex and demand for meetings more near the DFW Airport. So we can meet in either office or alternate between the two. Please state you preference by voting on the North Texas MySQL Users Group website.

Tuesday, July 13, 2010

Open Source BI -- Pentaho and Jaspersoft Part I

Hey DBAs! Are you seeking more efficient ways of shifting through your data to aid your business operations? Two popular Business Intelligence products have community Open Source software are Pentaho and JasperSoft. And both work with MySQL.

Both are easy to download and install. Both will use a JDBC connector to connect to MySQL. But how easy are the two to configure and run a simple report against a running instance of MySQL?

Setting up a JDBC connection with JasperSoft or Pentaho is pretty much like using any other JDBC connection.

The next step is to setup a query like SELECT name, job_title, department FROM employees, departments WHERE employees.emp_id = departments.emp_id. Either package will let you pick a variety of output templates. Then you have the BI software merge your query with the template. I honestly think an average MySQL DBA could fairly quickly generate a nice looking report from their instance and that JasperSoft would be just a little bit faster.

In part two of this series, the steps will be more detailed and documented. There will also being comparing and contrasting of the two products. Both products are part of larger projects and there are many useful tools that work with the BI software that you will want to investigate. More on those in later posts.

And in a short time you should be able to download a Virtual Box image with both community BI programs and a InfiniDB instance with some data sets. This way you can test all three simply. I would also consider doing a VMWare version if there is demand for it.

Both are easy to download and install. Both will use a JDBC connector to connect to MySQL. But how easy are the two to configure and run a simple report against a running instance of MySQL?

Setting up a JDBC connection with JasperSoft or Pentaho is pretty much like using any other JDBC connection.

The next step is to setup a query like SELECT name, job_title, department FROM employees, departments WHERE employees.emp_id = departments.emp_id. Either package will let you pick a variety of output templates. Then you have the BI software merge your query with the template. I honestly think an average MySQL DBA could fairly quickly generate a nice looking report from their instance and that JasperSoft would be just a little bit faster.

In part two of this series, the steps will be more detailed and documented. There will also being comparing and contrasting of the two products. Both products are part of larger projects and there are many useful tools that work with the BI software that you will want to investigate. More on those in later posts.

And in a short time you should be able to download a Virtual Box image with both community BI programs and a InfiniDB instance with some data sets. This way you can test all three simply. I would also consider doing a VMWare version if there is demand for it.

Friday, July 9, 2010

New home for Dallas MySQL Users Group

The Dallas Oracle Users Group has voted unanimously to accept the North Texas MySQL Users Group as a special interest group within their organization. This will give us wide exposure to the local DBA world, provide more venues for presentation (Irving and Plano), and the ability to have food & drink at meetings.

New presentations are being worked on for presentations including 'No SQL -- is traditional row based SQL in trouble'? Watch here for meeting information.

New presentations are being worked on for presentations including 'No SQL -- is traditional row based SQL in trouble'? Watch here for meeting information.

Wednesday, June 30, 2010

Doing your own on-time flight time analysis Part III

In the last post, the data from the on-time flight database was loaded in a column-orientated storage engine. Now the numbers can be crunched.

The original goal of this exercise was to find the flight from Los Angeles International Airport, LAX, to Dallas Fort Worth International Airport, DFW, that was the most likely to arrive on-time.

The data is 'opportunity rich' in that there is a lot information in there. It is easy to start wondering about the various nuggets of information in there. Are their certain aircraft (tail numbers) that are routinely bad performers? Are some days of the week better than others? Do national holidays have an effect on the on-time performance? If you are delayed, is there a 'regular amount' of delay? Does early departure make for an early arrival? Can the flight crew make up for a late departure? How much time is usually spend on runways?

But to look for the flight from LAX to DFW with the lowest arrival delay1.

So there are lots of good questions to ask with this data but it ends this series on doing your own on-time flight analysis. If anyone digs into the flight data further, please let the rest of us know what you are finding.

1. For the data on hand the answer is American Airlines flight 2463.

The original goal of this exercise was to find the flight from Los Angeles International Airport, LAX, to Dallas Fort Worth International Airport, DFW, that was the most likely to arrive on-time.

The data is 'opportunity rich' in that there is a lot information in there. It is easy to start wondering about the various nuggets of information in there. Are their certain aircraft (tail numbers) that are routinely bad performers? Are some days of the week better than others? Do national holidays have an effect on the on-time performance? If you are delayed, is there a 'regular amount' of delay? Does early departure make for an early arrival? Can the flight crew make up for a late departure? How much time is usually spend on runways?

But to look for the flight from LAX to DFW with the lowest arrival delay1.

SELECT FlightNum, SUM(ArrDelayMinutes) as Delay FROM flight WHERE Origin = 'LAX' AND Dest = 'DFW' GROUP BY FlighNum ORDER BY FlightNum;

So there are lots of good questions to ask with this data but it ends this series on doing your own on-time flight analysis. If anyone digs into the flight data further, please let the rest of us know what you are finding.

1. For the data on hand the answer is American Airlines flight 2463.

Thursday, June 24, 2010

Doing your own on-time flight analysis, Part II

The On Time Flight Data (see previous entry) has 93 rows per flight and the first line of the CSV file has the names of the columns. Use head -1 on that file to obtain that information and to build a schema by piping the output to a file (and do not forget to remove that top line before loading the data).

Strip out the quotation marks, add CREATE TABLE flight ( at the front and ) ENGINE=InfiniDB; at the end. Year is the title of the first column and it has been renamed to FlightYear to avoid confusion with the function of the same name. The next part is to assign a data type to each column and a quick perusal of the data will show which fields are numeric, which ones are alphanumeric, and the one column of type DATE. Personal preference on the designation are left up to the reader as an exercise in data architecture.

CREATE TABLE flight (FlightYear smallint,

Quarter tinyint,

Month tinyint,

...

Div5WheelsOff int,

Div5TailNum int) engine=InfiniDB;

Create the table by running MySQL and sourcing the file created. Now the data needs some attention.

The InfiniDB bulk loader cpimport can use any character to delimit fields. In CSV files it is painfully obvious that the fields are separated by commas. However the On Time Flight Data has a column, OriginCityName, that contains a comma. A extra column can be added after OriginCityName, the comma can be removed from that one column, OR the other commas need to be changed to another character. The easiest for most will be adding an extra column.

Next a XML file needs to be created using colxml, colxml -j 50 -d "," -l On_Time_Performance_2010_1.csv -t flight air. The the data can be loaded with cpimport, cpimport -j 50.

So for a quick test! Can I find the flights from LA back to Texas?

mysql> SELECT FlightNum, COUNT(FlightNum) FROM flight WHERE Origin = 'LAX' and Dest = 'DFW' GROUP BY FlightNum ORDER BY FlightNum;

+-----------+------------------+

| FlightNum | COUNT(FlightNum) |

+-----------+------------------+

| 2400 | 31 |

| 2404 | 30 |

| 2410 | 31 |

| 2412 | 31 |

| 2416 | 31 |

| 2422 | 31 |

| 2428 | 31 |

| 2434 | 31 |

| 2436 | 31 |

| 2440 | 31 |

| 2444 | 21 |

| 2446 | 31 |

| 2448 | 31 |

| 2450 | 26 |

| 2464 | 31 |

| 2470 | 31 |

| 2501 | 1 |

| 6219 | 31 |

| 6234 | 31 |

+-----------+------------------+

19 rows in set (0.52 sec)

Next time, find the flight least likely to be delayed!

| "Year", "Quarter", "Month", "DayofMonth", ... "Div5LongestGTime", "Div5WheelsOff", "Div5TailNum", |

Strip out the quotation marks, add CREATE TABLE flight ( at the front and ) ENGINE=InfiniDB; at the end. Year is the title of the first column and it has been renamed to FlightYear to avoid confusion with the function of the same name. The next part is to assign a data type to each column and a quick perusal of the data will show which fields are numeric, which ones are alphanumeric, and the one column of type DATE. Personal preference on the designation are left up to the reader as an exercise in data architecture.

CREATE TABLE flight (FlightYear smallint,

Quarter tinyint,

Month tinyint,

...

Div5WheelsOff int,

Div5TailNum int) engine=InfiniDB;

Create the table by running MySQL and sourcing the file created. Now the data needs some attention.

The InfiniDB bulk loader cpimport can use any character to delimit fields. In CSV files it is painfully obvious that the fields are separated by commas. However the On Time Flight Data has a column, OriginCityName, that contains a comma. A extra column can be added after OriginCityName, the comma can be removed from that one column, OR the other commas need to be changed to another character. The easiest for most will be adding an extra column.

Next a XML file needs to be created using colxml, colxml -j 50 -d "," -l On_Time_Performance_2010_1.csv -t flight air. The the data can be loaded with cpimport, cpimport -j 50.

So for a quick test! Can I find the flights from LA back to Texas?

mysql> SELECT FlightNum, COUNT(FlightNum) FROM flight WHERE Origin = 'LAX' and Dest = 'DFW' GROUP BY FlightNum ORDER BY FlightNum;

+-----------+------------------+

| FlightNum | COUNT(FlightNum) |

+-----------+------------------+

| 2400 | 31 |

| 2404 | 30 |

| 2410 | 31 |

| 2412 | 31 |

| 2416 | 31 |

| 2422 | 31 |

| 2428 | 31 |

| 2434 | 31 |

| 2436 | 31 |

| 2440 | 31 |

| 2444 | 21 |

| 2446 | 31 |

| 2448 | 31 |

| 2450 | 26 |

| 2464 | 31 |

| 2470 | 31 |

| 2501 | 1 |

| 6219 | 31 |

| 6234 | 31 |

+-----------+------------------+

19 rows in set (0.52 sec)

Next time, find the flight least likely to be delayed!

Tuesday, June 22, 2010

Doing your own on-time flight analysis, Part I

This will be a quick tutorial on looking at on-time flight analysis. This material will be part of a lab for a class on InfiniDB that I am developing. The information is from Data.Gov Website and you are free to follow the steps presented.

What I want to know is what flight from a certain airport arrives at my local airport on time the most frequently. Traveling from LAX to DFW can often be a combination of cancellations, flight delays, and being the nth plane in line for takeoff. So what is the best flight choice for that route?

The first step is getting the data. And is is available for free from Airline On-Time Performance and Causes of Flight Delays. Be sure to select the check box for documentation so that there will be a readme.html to described the file fields included in the zipped file you will download. If you pick all the available information you will receive over 500,000 lines in a .CSV file.

It is worth comparing what the readme.html file and a few sample lines of data from the .CSV file. Any programmer who has written to the specification and then found the 'real world' data is different from what was expected will expound on the value of double checking what you are 'supposed to have' and 'what you got'. In this case, for example, the FlightDate is documented as yyyymmdd but is yyyy-mm-dd in the data. This in itself is not a big deal but a little time can save aggravation later.

So in Part II, we will create a table and load up the data.

What I want to know is what flight from a certain airport arrives at my local airport on time the most frequently. Traveling from LAX to DFW can often be a combination of cancellations, flight delays, and being the nth plane in line for takeoff. So what is the best flight choice for that route?

The first step is getting the data. And is is available for free from Airline On-Time Performance and Causes of Flight Delays. Be sure to select the check box for documentation so that there will be a readme.html to described the file fields included in the zipped file you will download. If you pick all the available information you will receive over 500,000 lines in a .CSV file.

It is worth comparing what the readme.html file and a few sample lines of data from the .CSV file. Any programmer who has written to the specification and then found the 'real world' data is different from what was expected will expound on the value of double checking what you are 'supposed to have' and 'what you got'. In this case, for example, the FlightDate is documented as yyyymmdd but is yyyy-mm-dd in the data. This in itself is not a big deal but a little time can save aggravation later.

So in Part II, we will create a table and load up the data.

Tuesday, June 15, 2010

Anybody have a few millions lines of Apache Log files they can share?

Anybody have a few millions lines of Apache Log files they can share? I am working on a lab/demo on InfiniDB and need a few millions lines of an Apache HTTPD log file. I no longer run any large websites and would rather use real data over creating something. I will sanitize your URL so you will be anonymous, so www.YourCompanyHere.com will end up as www.abc123.com or something similar.

The demo/lab will show how to load data into the columnar InfiniDB storage engine and run some analytics against the data. Please let me know if you can help.

The demo/lab will show how to load data into the columnar InfiniDB storage engine and run some analytics against the data. Please let me know if you can help.

Wednesday, June 9, 2010

InfinDB Extent Maps saving I/O

InfiniDB uses Extent Maps to retrieve data. Data is stored in extents and each extent is a logical block of space that holds the same number of rows.

InfiniDB uses Extent Maps to retrieve data. Data is stored in extents and each extent is a logical block of space that holds the same number of rows. The Extent Map catalogs all extents and their corresponding blocks. The Extent Map also maintains minimum and maximum values for a column’s data within an extent.

The Extent Map lets InfiniDB to retrieve only the blocks needed to satisfy a query. There is another benefit – that of logical range partitioning. This is accomplished via the minimum and maximum values for each extent that are contained within the Extent Map. Extent elimination is first accomplished in InfiniDB via the column-oriented architecture (only needed columns are scanned), but the process is accelerated because of the logical horizontal partitioning that is implemented in the Extent Map. So only the needed sections of the column are read. So if only the data from Col1 between 105 and 180 is requested, InfiniDB will return data from Extent 2, skipping Extents 1, 3 and 4. That saves a 75% of I/O compared to a row based storage engine.

The savings in I/O also carries over to more complex queries. If a column WHERE filter of “col1 BETWEEN 220 AND 250 AND col2 < 10000” is specified, InfiniDB will eliminate extents 1, 2 and 4 from the first column filter, then, looking at just the matching extents for col2 (i.e. just extent 3), it will determine that no extents match and return zero rows without doing any I/O at all.

The savings in I/O also carries over to more complex queries. If a column WHERE filter of “col1 BETWEEN 220 AND 250 AND col2 < 10000” is specified, InfiniDB will eliminate extents 1, 2 and 4 from the first column filter, then, looking at just the matching extents for col2 (i.e. just extent 3), it will determine that no extents match and return zero rows without doing any I/O at all.Upon system startup, the Extent Map file is read into memory and updated automatically as needed.

Thursday, May 27, 2010

InfiniDB Alpha 1.1.2 on 64-bit Windows and MySQL Workbench

This week has been rough on my computers and I had to reinstall Vista on my main Windows system. But being a 'glass half full' guy, I took it as an opportunity to load the new alphas for both InfiniDB and MySQl Workbench.

I downloaded the InfiniDB software from http://infinidb.org/downloads/cat_view/40-binary-release/137-112-alpha-binary-releases and Workbench from http://dev.mysql.com/downloads/workbench/ respectively. In stalled them in that order and started the database and then Workbench.

I was expecting to have to fiddle with configuring one or the other to get them to work together. But I received a delightful surprise. Both worked out of the chute with no customization. Congratulations to the folks writing the install scripts at both companies!

I downloaded the InfiniDB software from http://infinidb.org/downloads/cat_view/40-binary-release/137-112-alpha-binary-releases and Workbench from http://dev.mysql.com/downloads/workbench/ respectively. In stalled them in that order and started the database and then Workbench.

I was expecting to have to fiddle with configuring one or the other to get them to work together. But I received a delightful surprise. Both worked out of the chute with no customization. Congratulations to the folks writing the install scripts at both companies!

Tuesday, May 4, 2010

Getting started with InfiniDB Part I

My new job with Calpont has me scrambling to learn all the facets of the InfiniDB storage engine. For those of you new to the scene, InfiniDB is column-orientated as opposed to row-orientated and is designed for data warehouses and business intelligence. Most MySQL DBAs are probably not used to thinking sideways and hopefully this series of postings will change that.

To make things interesting, I am using real data from BP's Statistical Review of World Energy 2009. The data is a historical information on various forms of energy. And it is free to download and comes in Excel workbook format. I wanted data that was unfamiliar to me and in a format that would be commonly used in a BI setting.

The first step is to obtain and install the InfiniDB community software. Since the install instructions are extremely clear, I will not go over those steps.

Next I exported the data from the Crude oil prices 1861 to 2008 worksheet into a CSV file. Now you have choices on how to load the data into your table. The first is the good old MySQL LOAD DATA INFILE statement.

CREATE TABLE oil (`year` INT(4), price_historical DECIMAL(5,2), price_current(5,2)) ENGINE=InfiniDB;

So now we can ask for the historical average price of a barrel of oil.

To make things interesting, I am using real data from BP's Statistical Review of World Energy 2009. The data is a historical information on various forms of energy. And it is free to download and comes in Excel workbook format. I wanted data that was unfamiliar to me and in a format that would be commonly used in a BI setting.

The first step is to obtain and install the InfiniDB community software. Since the install instructions are extremely clear, I will not go over those steps.

Next I exported the data from the Crude oil prices 1861 to 2008 worksheet into a CSV file. Now you have choices on how to load the data into your table. The first is the good old MySQL LOAD DATA INFILE statement.

mysql>USE energy; LOAD DATA INFILE '/tmp/oil.csv' INTO TABLE oil FIELDS TERMINATED BY ',';Or you can use the supplied bulk loading tool. In this example the table is simple and there are only 148 rows. LOAD DATA INFLE works fine for small sets. I copied CSV file into the directory InfiniDB used for the bulk loading tool -- /usr/local/Calpont/data/bulk/data/import --, changed the commas to pipes (s/,/|/g) preferred by the bulk loader, and prepare it for the columns tructure:

# /usr/local/Calpont/bin/colxml energy -t oil -j04 Bulkload root directory : /usr/local/Calpont/data/bulk job description file : Job_04.xml 2010-05-04 12:42:21 (5364) INFO : successfully load job file /usr/local/Calpont/data/bulk/job/Job_04.xml 2010-05-04 12:42:21 (5364) INFO : PreProcessing check starts 2010-05-04 12:42:21 (5364) INFO : PreProcessing check completed 2010-05-04 12:42:21 (5364) INFO : preProcess completed, total run time : 0 seconds 2010-05-04 12:42:21 (5364) INFO : No of Read Threads Spawned = 1 2010-05-04 12:42:21 (5364) INFO : No of Parse Threads Spawned = 3 2010-05-04 12:42:21 (5364) INFO : For table energy.oil: 148 rows processed and 148 rows inserted. 2010-05-04 12:42:22 (5364) INFO : Bulk load completed, total run time : 1 seconds [root@localhost import]#Now to load the data:

/usr/local/Calpont/bin/cpimport -j 04 Running colxml with the following parameters: 2010-05-04 12:42:13 (5312) INFO : Schema: energy Tables: oil Load Files: -b 0 -c 1048576 -d | -e 10 -f CSV -j 04 -n -p /usr/local/Calpont/data/bulk/job/ -r 5 -s -u -w 10485760 -x tbl File completed for tables: energy.oil Normal exit.Almost forgot to show you the table!

CREATE TABLE oil (`year` INT(4), price_historical DECIMAL(5,2), price_current(5,2)) ENGINE=InfiniDB;

So now we can ask for the historical average price of a barrel of oil.

mysql>SELECT AVG(price_historical) AS 'Historical Avg Price' FROM oil\g +----------------------+ | Historical Avg Price | +----------------------+ | 7.772297 | +----------------------+Many of you reading this are probably muttering 'I could have done this with any storage engine except Blackhole! and you are right. The beauty behind the column orientated approach does not appear until you are working with large sets of data. In the next post we will get into large sets of data and compare speeds with other storage engines.

Monday, May 3, 2010

High Availability MySQL Cookbook

MySQL is an easy database to get running initially but it can be tricky to run in demanding environments.  High Availability MySQL Cookbook is a relatively thin book packed with information.

High Availability MySQL Cookbook is a relatively thin book packed with information.

The first four chapters are on the care and feeding of a MySQL 7.x Cluster. It starts simply with an initial configuration, covers backups & replication, covers user defined partitioning, and covers troubleshooting node failures. The examples are short, to the point, and devoid of any unneeded filler.

Next come chapters on replication including the very tricky Multi Master Replication. Used shared storage is covered in a separate chapter from DRBD. The book finishes strongly with performance tuning.

The good points -- the book is concise and not padded with filler. The bad is that it is too short. Considering how well the author covers the topics that may not be too big a of a problem. For a novice to intermediate level MySQL DBA, this is a valuable guide on making your databases highly available. Long time MySQL DBAs will find nothing new but might appreciate the clear and concise explanations presented.

High Availability MySQL Cookbook is a relatively thin book packed with information.

High Availability MySQL Cookbook is a relatively thin book packed with information.The first four chapters are on the care and feeding of a MySQL 7.x Cluster. It starts simply with an initial configuration, covers backups & replication, covers user defined partitioning, and covers troubleshooting node failures. The examples are short, to the point, and devoid of any unneeded filler.

Next come chapters on replication including the very tricky Multi Master Replication. Used shared storage is covered in a separate chapter from DRBD. The book finishes strongly with performance tuning.

The good points -- the book is concise and not padded with filler. The bad is that it is too short. Considering how well the author covers the topics that may not be too big a of a problem. For a novice to intermediate level MySQL DBA, this is a valuable guide on making your databases highly available. Long time MySQL DBAs will find nothing new but might appreciate the clear and concise explanations presented.

Friday, April 30, 2010

No North Texas Users Group Meeting in May, new job

There will not be a May meeting of the North Texas MySQL Users Group but I am scrambling to get us a great location for the June meeting. I have left my Job with MySQL/Sun/Oracle and am no longer responsible for MySQL Certifications. That means my access to the Sun office were we have been meeting is ended also.

I am now the Customer Service Manager for Calpont and the InfiniDB storage engine. InfiniDB is column based, multi-threaded, and the community edition is open source. If you run intensive reports against InnoDB or MyIsam databases, you need to investigate this product. And if you regularly run massive queries in data warehousing or business analytic operation that you need to see how the massively parallel process architecture of the enterprise product can make life easier.

I am now the Customer Service Manager for Calpont and the InfiniDB storage engine. InfiniDB is column based, multi-threaded, and the community edition is open source. If you run intensive reports against InnoDB or MyIsam databases, you need to investigate this product. And if you regularly run massive queries in data warehousing or business analytic operation that you need to see how the massively parallel process architecture of the enterprise product can make life easier.

Monday, April 19, 2010

Book Review: MySQL Admin Cookbook

Usually I try to avoid the cookbook type of computer books because usually the 'recipies', often messy scripts or pages of obfuscated code, seem only to work for the authors and not for me. So I had a little trepidation when I was asked to review the  MySQL Admin Cookbook. Daniel Schneller and Udo Schwedt manage to pack a lot of very solid information into 360 pages of text that would work for novice to intermediate MySQL DBAs and provide some food for thought for seasoned DBAs.

MySQL Admin Cookbook. Daniel Schneller and Udo Schwedt manage to pack a lot of very solid information into 360 pages of text that would work for novice to intermediate MySQL DBAs and provide some food for thought for seasoned DBAs.

What I liked: The material was presented with the reasons behind the recipe and pointers to useful tools. Yes, all the material is in the manuals but sometimes there are too many trees in the way for a novice so see the trees. In very calm ,concise language, the authors tackle successfully a wide range of DBA chores in a way that is easy to follow.

I would not hesitate to give this book to a novice to intermediate DBA as a tool to help them work through replication, configuration, indexes and tools.

What I did not like: Time has marched on and the references to MySQL Administrator make a few tiny sections seem dated but I am sure only the Workbench team knew what that product would evolve into. Also the section on monitoring is a bit light but then most DBAs do not need to wade in as deeply as the book goes let alone into Enterprise Monitor or other tools.

So this book is a solid four out of five stars and well worth being brought home by those new to MySQL DBA work and those seeking 'recipes' for thie instances.

MySQL Admin Cookbook. Daniel Schneller and Udo Schwedt manage to pack a lot of very solid information into 360 pages of text that would work for novice to intermediate MySQL DBAs and provide some food for thought for seasoned DBAs.

MySQL Admin Cookbook. Daniel Schneller and Udo Schwedt manage to pack a lot of very solid information into 360 pages of text that would work for novice to intermediate MySQL DBAs and provide some food for thought for seasoned DBAs.What I liked: The material was presented with the reasons behind the recipe and pointers to useful tools. Yes, all the material is in the manuals but sometimes there are too many trees in the way for a novice so see the trees. In very calm ,concise language, the authors tackle successfully a wide range of DBA chores in a way that is easy to follow.

I would not hesitate to give this book to a novice to intermediate DBA as a tool to help them work through replication, configuration, indexes and tools.

What I did not like: Time has marched on and the references to MySQL Administrator make a few tiny sections seem dated but I am sure only the Workbench team knew what that product would evolve into. Also the section on monitoring is a bit light but then most DBAs do not need to wade in as deeply as the book goes let alone into Enterprise Monitor or other tools.

So this book is a solid four out of five stars and well worth being brought home by those new to MySQL DBA work and those seeking 'recipes' for thie instances.

Friday, April 16, 2010

2010 UC Cert Wrap-up

The 2010 MySQL is over but many have left with certifications among their souvenirs. 150 exams were given this year and we now have three new Associates, two new cluster DBAs, two new 5.1 DBAs, twelve new developers, and thirty-eight new 5.0 DBAs! Some folks walked away with two or three new certifications. Those were just the folks who tested this year and does not count those who finished up a 5.0 DBA or 5.0 Developer cert at the UC!

Thank you to all who took exams this year. It takes a few days for the exam data to peculate into the Sun Certification Database but your certification kits will be posted ASAP.

A big thank you to Carey Hardey and Shankar Kondur who ran certifications this year. An Oracle travel ban kept me from attending this year and Carey, from the Oracle University Certification Department volunteered to travel down the treacherous Highway 101 to oversee the exams. Shankar is from Devsquare and made sure the new plaftform for the hands on exam worked properly for the UC.

Thank you to all who took exams this year. It takes a few days for the exam data to peculate into the Sun Certification Database but your certification kits will be posted ASAP.

A big thank you to Carey Hardey and Shankar Kondur who ran certifications this year. An Oracle travel ban kept me from attending this year and Carey, from the Oracle University Certification Department volunteered to travel down the treacherous Highway 101 to oversee the exams. Shankar is from Devsquare and made sure the new plaftform for the hands on exam worked properly for the UC.

Thursday, April 15, 2010

Last day of testing at the MySQL Users Conference

Today is you last day to take MySQL Certification Exams at a deeply discounted price. So if you want to test, head over before the doors close on the Magnolia room for 2010!!

Wednesday, April 14, 2010

Results of Certification Exams from First Day of the MySQL Users Conference

Please note that Oracle Univerisity has placed a hard limit on number of exams at the Users Conference this year. If you wait to the last moment, you may not get a chance to show your knowledge!

Congratulations to those who took certification exams at the MySQL Users Conference this year. We have 18 new 5.0 DBAs and 2 new 5.0 Developers after Monday.

Testing is in the Magnolia Room in the Hyatt, near the front desk and starts at 8:30 AM PDT.

Congratulations to those who took certification exams at the MySQL Users Conference this year. We have 18 new 5.0 DBAs and 2 new 5.0 Developers after Monday.

Testing is in the Magnolia Room in the Hyatt, near the front desk and starts at 8:30 AM PDT.

Tuesday, April 6, 2010

Certification at the MySQL Users Conference

If you are planning to take a certification exam at the MySQL Users conference next week, please bring a laptop with WiFi and plan to come early! Testing will be in the Magnolia Room by the escalators in the Hyatt Lobby from 8:30 AM to 3:00 PM starting Tuesday.

The exams are online which means you will need a laptop.

And this year there is a limit of 250 exams total. Once that hard limit is reached, there will be no more exams given at the UC in 2010. So do not wait to the last minute to finish off that certification.

The exams are online which means you will need a laptop.

And this year there is a limit of 250 exams total. Once that hard limit is reached, there will be no more exams given at the UC in 2010. So do not wait to the last minute to finish off that certification.

Thursday, April 1, 2010

North Texas Users -- InnoDB Plugin

The InnoDB plugin will be the subject of the next North Texas MySQL Users Group on Monday April 5th. The InnoDB plugin has some big performance and scaling benefits. But there are some messy things you have to take care of before it will work.

All are welcome!

Monday April 5th

7:00 PM

Suite 700

16000 North Dallas Tollway

Dallas, TX

Monday, March 8, 2010

InnoDB plugin training

MySQL is a constantly moving target and keeping up on the latest changes can be a difficult chore. A lot of folks seeking certification are often swamped by evolutions in the MySQL product. On Tuesday there is a FREE web seminar where MySQL Professional Services experts will walk you through best practices for achieving performance and scalability improvements using MySQL 5.1 and the new InnoDB Plugin. This is part two of the series and you do not have to have seen part one to join.

List of Web Seminars

List of Web Seminars

Monday, February 22, 2010

Dallas MySQL Users Group meeting March 1st

March 1st Meeting

Workbench and Future

Workbench suddenly has a new bunch of features and that will be the main subject of Monday's meeting. This visual design, documentation, and all around database tool is quickly evolving into a 'must have' tool in your arsenal.

Plus, the North Texas MySQL Users Group Will be losing our home in a few months. We need to discuss if we will seek another, join the Dallas Oracle Users Group as a Special Interest Group or ???

Seven PM Sharp

Suite 700

16000 Dallas Tollway

Dallas Texas

Workbench and Future

Workbench suddenly has a new bunch of features and that will be the main subject of Monday's meeting. This visual design, documentation, and all around database tool is quickly evolving into a 'must have' tool in your arsenal.

Plus, the North Texas MySQL Users Group Will be losing our home in a few months. We need to discuss if we will seek another, join the Dallas Oracle Users Group as a Special Interest Group or ???

Seven PM Sharp

Suite 700

16000 Dallas Tollway

Dallas Texas

Thursday, February 11, 2010

DOUG Presentation -- An Introduction to MySQL

I will be presenting to the Dallas Oracle Users Group on An Introduction to MySQL : 99 Things You Probably Did Not Know on Thursday, February 18, 2010 from 4 pm – 6 pm. Refreshments sponsored by Sun. More details on the DOUG site or North Texas MySQL Users Group.

Wednesday, February 10, 2010

Exams ARE US$200

Whew! The Sun MySQL exams (310-810 through 310-815) are back to $200. I just checked the Prometric Site and was able to confirm.

Thursday, January 28, 2010

N. Texas Users Group meeting

Monday February 1st, 7:00 PM, Suite 700,Sun Offices 16000 Dallas Tollway, Dallas, Texas

Thursday, January 21, 2010

MySQL Certification Exam Price Raised

Sun has made changes in the certification procedures for all their exams including the MySQL exams. Exam vouchers are no longer offered through local Sun Sales Offices but exams can be purchased directly from Prometric. The price has been raised to $300 per exam.

The exam codes are:

Sun 310-810 Sun Certified MySQL DBA 5.0 part I

Sun 310-811 Sun Certified MySQL DBA 5.0 part II

Sun 310-812 Sun Certified MySQL Developer 5.0 part I

Sun 310-813 Sun Certified MySQL Developer 5.0 part II

Sun 310-814 Sun Certified MySQL Associate

Sun 310-815 Sun Certified MySQL 5.1 Cluster DBA

The new prices went into effect January 19th.

The exam codes are:

Sun 310-810 Sun Certified MySQL DBA 5.0 part I

Sun 310-811 Sun Certified MySQL DBA 5.0 part II

Sun 310-812 Sun Certified MySQL Developer 5.0 part I

Sun 310-813 Sun Certified MySQL Developer 5.0 part II

Sun 310-814 Sun Certified MySQL Associate

Sun 310-815 Sun Certified MySQL 5.1 Cluster DBA

The new prices went into effect January 19th.

Thursday, January 14, 2010

Publishing your MySQL Certs from the Sun Certification system

I received a question from my last entry on how to let others see your MySQL Certifications. In the past, the MySQL site had a list of those with the various certifications. Sun's certification system is different.

So what will the recipient receive? They will receive an email from The Sun Credential Verification System with a link and a authorization code to view your credentials.

- Go to the Sun Certification Database

- Enter your ID and password. The format for the ID is usually SUNnnnnnnn but it may be different. There is a First Time Users link and a link to get your ID and password (you do remember the email you used when you took that MySQL, don't you?). And if you get stuck, contact Certificiation@SUN.Com

- On the left hand menu, under Certifications will be a link for Publish Credentials.

- Here you provide an email address for where you want your certifications sent. You can also select which certification you want to share.

- You will get a chance to preview of what will be sent. The Void -- Sample Only Water mark will not be on the sent message.

So what will the recipient receive? They will receive an email from The Sun Credential Verification System with a link and a authorization code to view your credentials.

Wednesday, January 13, 2010

MySQL Certs up 48% in 2009

Despite a bad economy and switching testing partners, MySQL certifications were up 48% in 2009. Most of the growth was from the new hands-on DBA 5.1 exam with the multiple choice exams gaining roughly 10 percent. The only exception was the Associates certification which actually decreased slightly.

Congratulations to all of you how earned a certification in 2009 and thank you for your support of MySQL.

Congratulations to all of you how earned a certification in 2009 and thank you for your support of MySQL.

Thursday, January 7, 2010

Fulfillment Issues -- getting back on the right foot

MySQL Certification had a very busy 2009 with a great deal of change. Not all of the changes happened gracefully and now I am correcting problems with the fulfillment system. Regular readers will remember that MySQL Certification was integrated into the systems used by Sun Learning in mid 2009. Where things got shaky was in the processing of DBA 5.1 certificates.

MySQL Certification had a very busy 2009 with a great deal of change. Not all of the changes happened gracefully and now I am correcting problems with the fulfillment system. Regular readers will remember that MySQL Certification was integrated into the systems used by Sun Learning in mid 2009. Where things got shaky was in the processing of DBA 5.1 certificates.Previously Ricky Ho and I oversaw data entry, database updates, and fulfillment. The deeply missed Ricky did the heavy lifting in the printing and mailing of certificates. The test data from the multiple choice exams was flowing properly into the new (for MySQL) system but not for the hands-on DBA 5.1 exam. I have been going through the certification records and manually entering the data for the 5.1 DBA certificate holders. It will take a week or so to get things caught up. Meanwhile, if you are still waiting for a certificate for your MySQL 5.1 certification, please let me know at Certification@MySQL.com.

MySQl Certification exams will be offered at the 2010 O'Reilly MySQL Conference. More details will be posted here as they become available.

Saturday, January 2, 2010

North Texas 1st meeting of the year -- Workbench & Cookies

Happy New Year! Monday night is the first meeting of the North Texas MySQL Users Group for 2010! And the topic for discussion will be MySQL Workbench.

Workbench is a tool for visual database design, schema documentation, change management, and is replacing the old MySQL GUI tools. If you have not tried the latest releases, you will be for a pleasant surprise.

NorthTexasMySQL.org meets at 7:00PM sharp at the Sun Offices, Suite 700, 16000 Dallas Tollway, Dallas TX. We welcome all who want to know more about MySQL, databases, or Open Source. Admission is free and I will bring cookies!

Workbench is a tool for visual database design, schema documentation, change management, and is replacing the old MySQL GUI tools. If you have not tried the latest releases, you will be for a pleasant surprise.

NorthTexasMySQL.org meets at 7:00PM sharp at the Sun Offices, Suite 700, 16000 Dallas Tollway, Dallas TX. We welcome all who want to know more about MySQL, databases, or Open Source. Admission is free and I will bring cookies!

Subscribe to:

Comments (Atom)